Shiki is a syntax highlighter that uses TextMate grammars and themes, the same engine that powers VS Code, to provide accurate and beautiful syntax highlighting for your code snippets. It was created by Pine Wu back in 2018, when it started as an experiment to use Oniguruma for syntax highlighting. Unlike existing syntax highlighters such as Prism and Highlight.js, which are designed to run in the browser, Shiki took the interesting approach of highlighting ahead of time. It ships static, already highlighted HTML to the client, producing some of the most accurate and beautiful syntax highlighting thanks to the TextMate grammars and themes, while shipping zero JavaScript to the client. It soon took off and became a very popular choice, especially for static site generators and documentation sites.

While Shiki is awesome, it's still a library designed to run on Node.js. This means it is limited to highlighting static code and has trouble with dynamic code, because it doesn't work in the browser. The reason is that Shiki relies on the WASM binary of Oniguruma, as well as a bunch of heavy grammar and theme files in JSON. It depends on the Node.js filesystem and path resolution to load these files, neither of which is accessible in the browser.

To improve that situation, I started this RFC, which later landed with this PR and shipped in Shiki v0.9. While it abstracted the file loading layer to use fetch or the filesystem depending on the environment, it was still quite complicated to use: you needed to manually serve the grammar and theme files somewhere in your bundle or CDN, and call the setCDN method to tell Shiki where to load them from.

The solution was not perfect, but at least it made it possible to run Shiki in the browser to highlight dynamic content. We have been using that approach ever since, until the story of this article begins.

The Start

Nuxt is putting a lot of effort into pushing the web to the edge, which makes the web more accessible with lower latency and better performance. Edge hosting services like Cloudflare Workers are deployed all over the world, like CDN servers, so users get content from the nearest edge server without round trips to an origin server that could be thousands of miles away. These benefits come with some trade-offs. Edge servers usually run a more restricted runtime environment; for example, Cloudflare Workers does not support filesystem access and usually doesn't preserve state between requests. Since Shiki's main overhead is loading the grammars and themes upfront, it wouldn't work well in an edge environment.

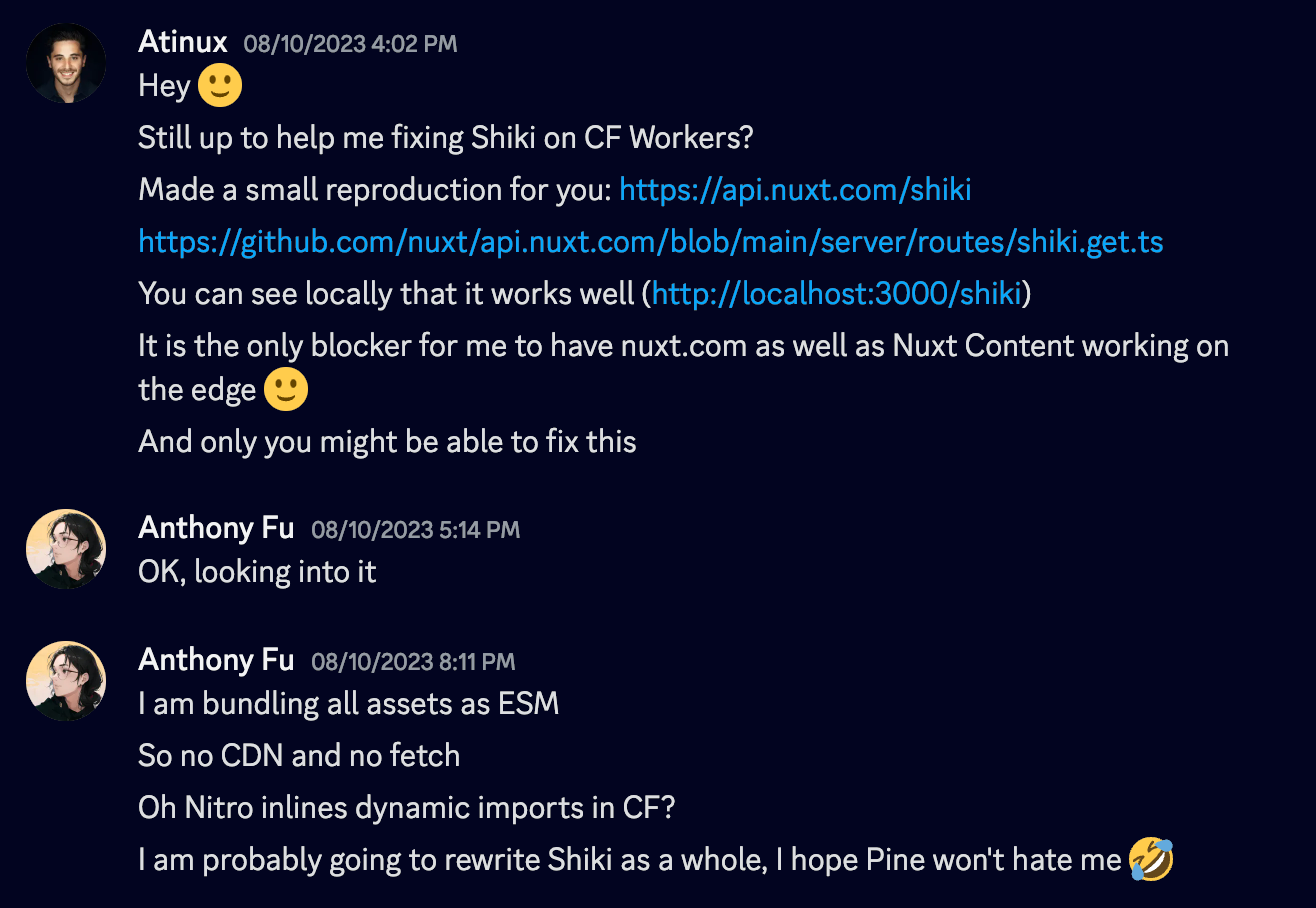

It all started with a chat between Sébastien and me. We were trying to make Nuxt Content, which uses Shiki to highlight code blocks, work on the edge.

I started experimenting by patching Shiki locally to convert the grammars and themes into ECMAScript Modules (ESM), so that build tools could understand and bundle them into the code bundle for Cloudflare Workers to consume, without reading the filesystem or making network requests.

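// Before: a Node.js-only filesystem read
import fs from 'fs/promises'

const cssGrammar = JSON.parse(await fs.readFile('../langs/css.json', 'utf-8'))

// After: a standard dynamic import that bundlers can follow
const cssGrammar = await import('../langs/css.mjs').then(m => m.default)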

We need to wrap the JSON files into ESM as inline literals so that we can use import() to dynamically import them. The difference is that import() is a standard JavaScript feature that works everywhere JavaScript runs, while fs.readFile is a Node.js-specific API that only works in Node.js. Having static import() calls also lets bundlers like Rollup and webpack construct the module graph and emit the bundled code in chunks.
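As a rough illustration of the wrapping step, here is a minimal sketch of a build-time helper that turns the text of a JSON file into ESM source. The function name `jsonToEsmSource` and the sample grammar are made up for this example; this is not Shiki's actual build script.

```javascript
// Hypothetical build-step helper: turn the text of a JSON grammar file
// into the source of an ES module that default-exports the same data.
function jsonToEsmSource(jsonText) {
  // Parse first so that invalid JSON fails at build time, not at runtime.
  const data = JSON.parse(jsonText)
  // JSON text is valid JavaScript expression syntax (since ES2019),
  // so the re-serialized data can be inlined directly as a literal.
  return `export default ${JSON.stringify(data)}\n`
}

// Example input standing in for the contents of a grammar file.
const source = jsonToEsmSource('{ "name": "css", "scopeName": "source.css" }')
console.log(source)
```

Writing the returned string to a `.mjs` file next to the original JSON would give a module that can be loaded with import().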

Then I realized that proper worker support takes more than that. Bundlers expect imports to be resolvable at build time, which means that to support all the languages and themes, we would need to list import statements for every single grammar and theme file in the codebase. This would end up producing a huge bundle with a bunch of grammars and themes that you might never use. The problem is particularly important in edge environments, where bundle size is critical to performance.
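To picture the constraint, here is a minimal sketch of an explicit loader registry. In a real project each entry would be something like `() => import('../langs/css.mjs')`, with a literal specifier the bundler can resolve; here the loaders are stand-ins that resolve inline objects so the example runs on its own. The names `langs`, `loadLanguages`, and `requested` are assumptions for illustration.

```javascript
// Records which loaders were actually invoked.
const requested = []

// One entry per language. Each loader is a stand-in for a dynamic import
// with a literal specifier, e.g. () => import('../langs/css.mjs').
const langs = {
  css: () => { requested.push('css'); return Promise.resolve({ default: { name: 'css' } }) },
  html: () => { requested.push('html'); return Promise.resolve({ default: { name: 'html' } }) },
  json: () => { requested.push('json'); return Promise.resolve({ default: { name: 'json' } }) },
}

// Load only the languages that were asked for; the rest stay untouched.
function loadLanguages(names) {
  return Promise.all(names.map((name) => {
    const loader = langs[name]
    if (!loader)
      throw new Error(`Unknown language: ${name}`)
    return loader().then(m => m.default)
  }))
}

const pending = loadLanguages(['css'])
console.log(requested) // only the css loader has been called
```

Every key in the registry still has to be written out by hand, and a bundler will emit a chunk for each one, which is exactly the cost described above.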

So we needed to find a better middle ground to make it work well.

The Fork - Shikiji

Knowing this might fundamentally change the way Shiki works, and not wanting to risk breaking existing Shiki users with our experiments, I started a fork of Shiki called Shikiji. The idea was to revisit the previous design decisions and start from scratch. The goal is to make Shiki runtime-agnostic, performant, and efficient, in line with the philosophy we have at UnJS.

To make that happen, we needed to make Shikiji completely ESM-friendly, pure, and tree-shakable. This goes all the way up to Shiki's dependencies: both vscode-oniguruma and vscode-textmate are provided in CommonJS (CJS) format. vscode-oniguruma also contains a WASM binding generated by emscripten, which contains dangling promises that make Cloudflare Workers fail to finish the request. We ended up embedding the WASM binary as a base64 string shipped as an ES module, manually rewriting the WASM binding to avoid dangling promises, and vendoring vscode-textmate to compile it from its source code and produce efficient ESM output.
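The base64 embedding can be sketched as follows. This is only an illustration of the technique, not Shikiji's actual binding code: the string below is the 8-byte empty WebAssembly module standing in for the much larger Oniguruma binary, and the helper name `decodeBase64` is an assumption.

```javascript
// The smallest valid WebAssembly module (magic number + version),
// base64-encoded. It stands in for the real Oniguruma binary.
const wasmBase64 = 'AGFzbQEAAAA='

// Decode base64 into bytes using atob, which is available in browsers,
// workers, and recent Node.js versions.
function decodeBase64(base64) {
  const binary = atob(base64)
  const bytes = new Uint8Array(binary.length)
  for (let i = 0; i < binary.length; i++)
    bytes[i] = binary.charCodeAt(i)
  return bytes
}

const wasmBytes = decodeBase64(wasmBase64)

// Compile and instantiate straight from the in-memory bytes:
// no filesystem read and no network request involved.
const instantiated = WebAssembly.instantiate(wasmBytes)
```

Because the binary travels as a plain string inside a module, any bundler can include it without special WASM handling, at the cost of the roughly one-third size overhead that base64 encoding adds.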

The end result was very promising. We managed to get Shikiji working in any runtime environment; it's even possible to import it from a CDN and run it in the browser with a single line of code.

We also took the chance to improve the API and the internal architecture of Shiki. We switched from simple string concatenation to hast, an Abstract Syntax Tree (AST) format for HTML, for generating the HTML output. This opens up the possibility of a Transformers API that can modify the intermediate hast and enables many cool integrations that would have been very hard to achieve previously.
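To show why a tree is easier to extend than concatenated strings, here is a small self-contained sketch. The tree shape is hast-like, and `walk`, `toHtml`, and `addLanguageClass` are hypothetical helpers written for this example; they are not Shiki's real Transformers API.

```javascript
// A tiny hast-like tree: element nodes carry tagName/properties/children,
// text nodes carry a value.
const tree = {
  type: 'element', tagName: 'pre', properties: { class: 'shiki' },
  children: [{
    type: 'element', tagName: 'code', properties: {},
    children: [{
      type: 'element', tagName: 'span', properties: { style: 'color:#D73A49' },
      children: [{ type: 'text', value: 'const' }],
    }],
  }],
}

// Hypothetical transformer: mutate only the nodes it cares about.
function addLanguageClass(node) {
  if (node.tagName === 'code')
    node.properties.class = 'language-js'
}

// Visit every element node in the tree, depth first.
function walk(node, visit) {
  if (node.type !== 'element') return
  visit(node)
  node.children.forEach(child => walk(child, visit))
}

function escapeHtml(text) {
  return text.replace(/&/g, '&amp;').replace(/</g, '&lt;').replace(/>/g, '&gt;')
}

// Serialize the tree to an HTML string as the very last step.
function toHtml(node) {
  if (node.type === 'text') return escapeHtml(node.value)
  const attrs = Object.entries(node.properties)
    .map(([key, value]) => ` ${key}="${escapeHtml(String(value)).replace(/"/g, '&quot;')}"`)
    .join('')
  return `<${node.tagName}${attrs}>${node.children.map(toHtml).join('')}</${node.tagName}>`
}

walk(tree, addLanguageClass)
const html = toHtml(tree)
console.log(html)
```

With string concatenation, the same change would require parsing or pattern-matching the generated HTML; with a tree, it is a property assignment before serialization.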

Dark/light mode support was a frequently requested feature. Because of the static approach Shiki takes, it isn't possible to change the theme on the fly at render time. The solutions in the past were either to generate the highlighted HTML twice and toggle visibility based on the user's preference, which was inefficient because it duplicated the payload, or to use a CSS variables theme, which loses the granular highlighting Shiki is great for. With Shikiji's new architecture, I took a step back, rethought the problem, and came up with the idea of breaking down the common tokens and merging multiple themes as inlined CSS variables, which provides efficient output while staying aligned with Shiki's philosophy. You can learn more about it in Shiki's documentation.
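The token break-down idea can be sketched like this, assuming each theme has already produced its own token list for the same line of code. The function names and the sample colors are made up for illustration, and the real implementation handles more than foreground color; the `--shiki-dark` variable name follows the convention of Shiki's dual-theme output.

```javascript
// Split two token lists covering the same text at the union of their
// boundaries, so every resulting chunk has exactly one color per theme.
function mergeThemeTokens(lightTokens, darkTokens) {
  const merged = []
  let i = 0, j = 0         // current token index in each list
  let usedI = 0, usedJ = 0 // characters already consumed from the current tokens
  while (i < lightTokens.length && j < darkTokens.length) {
    const light = lightTokens[i]
    const dark = darkTokens[j]
    // Take the longest chunk that stays inside both current tokens.
    const length = Math.min(light.content.length - usedI, dark.content.length - usedJ)
    merged.push({
      content: light.content.slice(usedI, usedI + length),
      light: light.color,
      dark: dark.color,
    })
    usedI += length
    usedJ += length
    if (usedI === light.content.length) { i++; usedI = 0 }
    if (usedJ === dark.content.length) { j++; usedJ = 0 }
  }
  return merged
}

function escapeText(text) {
  return text.replace(/&/g, '&amp;').replace(/</g, '&lt;').replace(/>/g, '&gt;')
}

// The light color is the default; the dark color rides along as a CSS variable.
function renderLine(tokens) {
  return tokens
    .map(t => `<span style="color:${t.light};--shiki-dark:${t.dark}">${escapeText(t.content)}</span>`)
    .join('')
}

// The two themes disagree on where the first token ends.
const lightTokens = [{ content: 'const ', color: '#D73A49' }, { content: 'x', color: '#24292E' }]
const darkTokens = [{ content: 'const', color: '#F97583' }, { content: ' x', color: '#E1E4E8' }]

const mergedTokens = mergeThemeTokens(lightTokens, darkTokens)
const lineHtml = renderLine(mergedTokens)
console.log(lineHtml)
```

The code is emitted once, and a stylesheet rule that applies `color: var(--shiki-dark)` under a dark-mode selector or media query is enough to switch themes without re-rendering.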

To make the migration easier, we also created the shikiji-compat compatibility layer, which uses Shikiji's new foundation and provides a backward-compatible API for Shiki users.

Getting Shikiji to work on Cloudflare Workers posed one extra challenge: for security reasons, Cloudflare Workers does not support instantiating WASM from inlined binary data and instead requires importing the static .wasm asset. This means our "All-in-ESM" approach does not work well on Cloudflare. Users would need to do extra work to provide a different WASM source, which makes the experience less smooth than we intended. At this point, Pooya Parsa stepped in and created the universal layer unjs/unwasm, which supports the upcoming WebAssembly/ES Module Integration proposal. It has been integrated into Nitro to provide automated WASM targets. We hope that unwasm will help the ecosystem have a better experience with WASM while aligning with the standard to be future-proof.

Overall, the Shikiji rewrite worked out well. Tools like Nuxt Content, VitePress, and Astro have already migrated to it, and the feedback we have received is very positive. That confirms the rewrite is the right direction, and that it should solve many problems other users have had in the past.

Merging Back

While I was already a team member of Shiki and helped with releases from time to time, Pine was still leading Shiki's direction. While he was busy with other things, Shiki's iterations slowed down. During the Shikiji experiments, I proposed a few improvements that could give Shiki a more modern structure. While everyone generally agreed with that direction, it would have been a lot of work, and no one had really started on it.

While we were happy to use Shikiji to solve the problems we had, we certainly didn't want to see the community split between two different versions of Shiki. So I had a call with Pine and explained what we had changed in Shikiji and what problems those changes solve. We reached a consensus to merge the two projects into one, and soon we had:

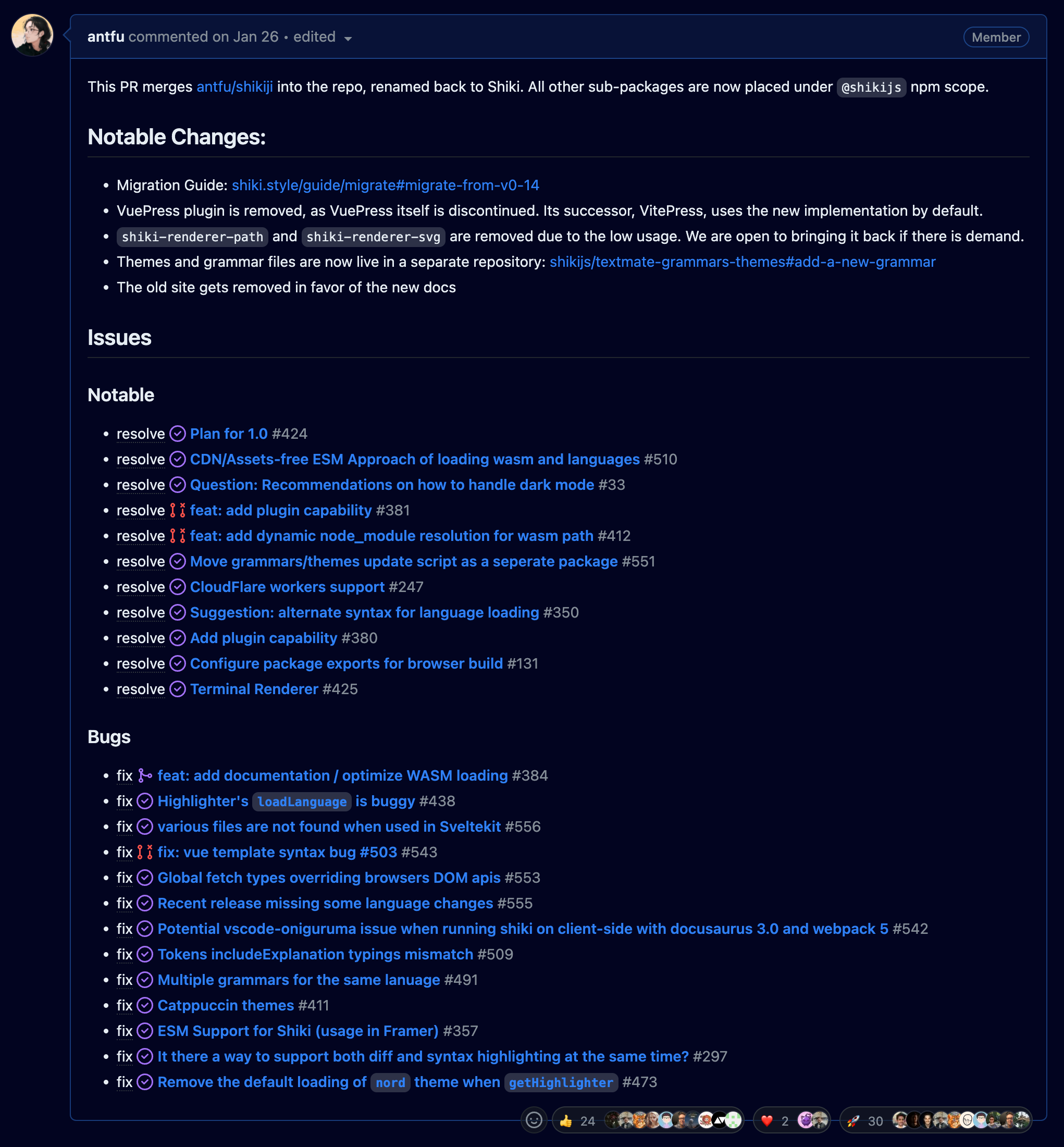

We are really happy to see our work in Shikiji merged back into Shiki, so that it not only works for us but also benefits the entire community. The merge solves around 95% of the issues that had been open in Shiki for years.

Twoslash

// TODO

Conclusions

Our mission at Nuxt is not only to make a better framework for developers, but also to make the entire frontend and web ecosystem a better place. We keep pushing the boundaries and endorsing modern web standards and best practices. We hope you enjoy the new Shiki, unwasm, Twoslash, and the many other tools we made in the process of making Nuxt and the web better.